Ethical AI in customer service aims to ensure fairness, transparency, privacy, safety, and accountability in every interaction. It builds trust by addressing issues like bias, data misuse, and lack of transparency while promoting clear communication and human oversight. This guide covers the core principles, the regulations that apply, and practical steps for putting them into practice.

Quick Tip: Tools like Converso combine AI efficiency with human oversight, ensuring smooth handoffs and compliance with privacy laws.

Ethical AI is about creating systems that respect customer dignity while enhancing service quality. Let’s explore how to implement these practices effectively.

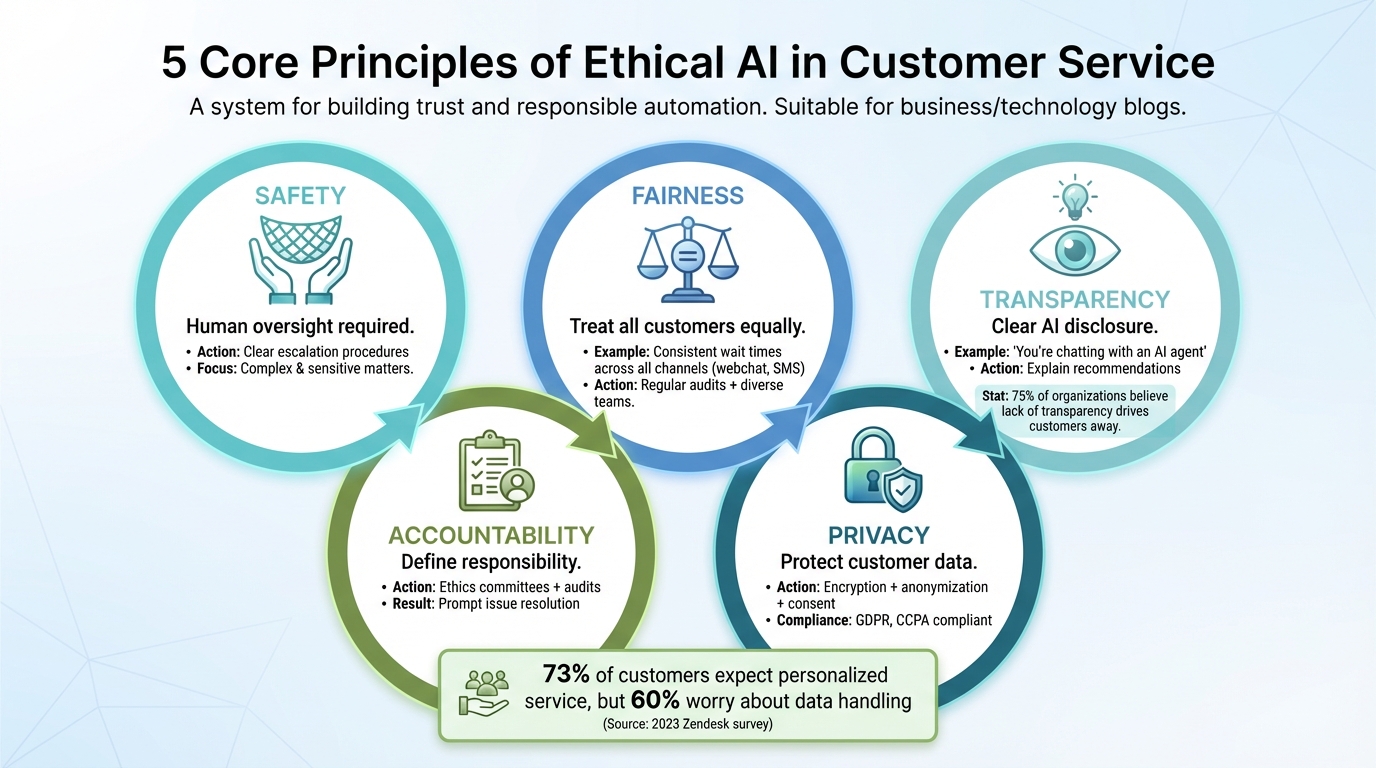

5 Core Principles of Ethical AI in Customer Service

Ethical AI in customer service is built on five main principles: fairness, transparency, privacy, accountability, and safety.

Fairness ensures that all customers are treated equally, regardless of their demographics or the communication method they use. For example, whether a customer contacts support via webchat or SMS, wait times should remain consistent. Regular audits and diverse development teams play a critical role in reducing biases.

Transparency focuses on making AI interactions clear and understandable. Customers should know when they are engaging with an AI system; a simple message such as "You're chatting with an AI agent" sets expectations. If the AI recommends a product, it should explain why, for example: "This product is suggested based on your recent purchases."

Privacy protects customer data through methods like encryption, anonymization, and obtaining clear consent.

Accountability involves defining who is responsible for AI-related decisions and ensuring issues are promptly addressed through audits and ethics committees.

Finally, safety emphasizes the need for robust systems with human oversight and clear procedures for escalating complex or sensitive matters.

These principles form the backbone of ethical AI practices and pave the way for compliance with regulatory standards.

Various regulatory frameworks guide the ethical use of AI in customer service. The EU AI Act sets standards for risk management, transparency, and accountability, particularly for high-risk AI systems, and its influence extends globally. Similarly, the GDPR requires businesses to prioritize data protection, obtain customer consent, and implement privacy-by-design measures when dealing with European customer data [10][4].

In the United States, the California Consumer Privacy Act (CCPA) requires businesses to clearly inform customers about how their data is used, allow them to opt out, and store data securely. Non-compliance can result in fines of up to $7,500 per intentional violation [10]. Meanwhile, emerging federal guidelines address AI fairness, calling for audits and prohibiting discriminatory practices. For companies using platforms like Converso, GDPR compliance is supported through features such as secure, tamper-proof archives that store all customer interactions for easy auditing [1].

These frameworks provide the structure for ethical AI operations, which will be explored further in the next section.

To integrate ethics into AI policies, organizations should establish clear guidelines for data privacy, bias reduction, transparency, and accountability. Forming an AI ethics committee with representatives from IT, legal, and customer service teams is essential. This committee can review algorithm performance, analyze customer feedback, and oversee audits.

Policies should include well-defined escalation procedures, strict data handling protocols, and access controls. Employees must be trained to understand both the strengths and limitations of AI so they know when human intervention is necessary - whether because the AI's response is inadequate or because the customer asks for a person. Compliance with regulations like GDPR and CCPA is crucial; Converso's AI tools, for example, maintain message confidentiality and restrict staff access to sensitive customer conversations [1].

To make AI-human collaboration effective, it’s important to define who does what. AI is best suited for routine tasks: handling password resets, checking order statuses, answering FAQs, or scheduling appointments. These tasks demand speed and consistency, which AI excels at. On the other hand, human agents step in for more complex issues - ones that need judgment, empathy, or flexibility. Think of situations like billing disputes over $500, emotionally charged complaints, or decisions involving high-value accounts.

Clear escalation rules ensure these roles work together smoothly. For instance, if AI confidence drops below 80%, the issue should automatically transfer to a human agent. The same applies when customers repeatedly express frustration, explicitly ask for human help, or bring up topics outside the AI's expertise, like legal or compliance questions. Human agents also need training to recognize and address bias or errors that may occur during these transitions [7].
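The escalation rules above can be expressed as a small decision function. This is an illustrative sketch only: `TurnSignals`, `escalation_reason`, the topic labels, and the handoff phrases are hypothetical names, and it assumes some upstream model already supplies a confidence score, a frustration flag, and topic labels for each customer turn. The 80% floor comes from the text; treating two frustrated messages as "repeatedly" is an assumption.

```python
from __future__ import annotations

from dataclasses import dataclass, field

CONFIDENCE_FLOOR = 0.80          # from the text: escalate below 80% confidence
FRUSTRATION_LIMIT = 2            # assumption: "repeatedly" = two or more turns
OUT_OF_SCOPE_TOPICS = {"legal", "compliance"}            # hypothetical labels
HANDOFF_PHRASES = ("human", "real person", "live agent")  # hypothetical phrases


@dataclass
class TurnSignals:
    """Signals a hypothetical upstream model attaches to each customer turn."""
    text: str
    confidence: float            # AI confidence in its answer, 0.0 to 1.0
    frustrated: bool = False     # e.g. from a sentiment classifier
    topics: set[str] = field(default_factory=set)


def escalation_reason(turns: list[TurnSignals]) -> str | None:
    """Return why the conversation should go to a human, or None to stay with AI."""
    latest = turns[-1]
    # An explicit request for a person always wins.
    if any(phrase in latest.text.lower() for phrase in HANDOFF_PHRASES):
        return "customer_requested_human"
    if latest.topics & OUT_OF_SCOPE_TOPICS:
        return "out_of_scope_topic"
    if latest.confidence < CONFIDENCE_FLOOR:
        return "low_confidence"
    if sum(t.frustrated for t in turns) >= FRUSTRATION_LIMIT:
        return "repeated_frustration"
    return None


conversation = [
    TurnSignals("Where is my order?", confidence=0.95),
    TurnSignals("This is ridiculous", confidence=0.90, frustrated=True),
    TurnSignals("Still the wrong answer!", confidence=0.91, frustrated=True),
]
print(escalation_reason(conversation))  # repeated_frustration
```

Checking the explicit request first means a customer who asks for a person is never held back by a high confidence score.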

This “co-pilot” approach allows AI to handle high volumes while human agents focus on complex, high-stakes cases. By dividing responsibilities this way, businesses can prepare for seamless implementation with tools like Converso.

Converso takes an ethical approach to AI-human collaboration, ensuring fairness and transparency across all communication channels. Its shared omnichannel inbox brings together conversations from platforms like webchat, WhatsApp, and SMS into one unified workspace. When an AI agent escalates an issue, it transfers the full conversation history to the human agent. This means customers don’t have to repeat themselves, and agents have all the context they need to jump in effectively.
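A handoff that carries the full conversation history can be modeled as a simple payload. This is a minimal sketch, not Converso's API: `Message`, `Handoff`, and all field names are hypothetical, chosen only to show the context a receiving agent needs so the customer does not have to repeat themselves.

```python
from __future__ import annotations

import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class Message:
    sender: str    # "customer", "ai", or "agent"
    channel: str   # e.g. "webchat", "whatsapp", "sms"
    text: str


@dataclass
class Handoff:
    """Hypothetical payload an AI agent passes to a human agent on escalation."""
    conversation_id: str
    reason: str
    history: list[Message] = field(default_factory=list)
    created_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def summary(self) -> str:
        """One-line briefing so the agent can pick up without re-asking."""
        channels = sorted({m.channel for m in self.history})
        opener = next((m.text for m in self.history if m.sender == "customer"), "")
        return (f"{self.conversation_id}: escalated for {self.reason}; "
                f"{len(self.history)} messages via {', '.join(channels)}; "
                f"opened with: {opener!r}")

    def to_json(self) -> str:
        """Serialize the full history, not just the last message."""
        return json.dumps(asdict(self))


handoff = Handoff(
    "conv-42",
    "billing_dispute",
    [
        Message("customer", "whatsapp", "My card was charged twice"),
        Message("ai", "whatsapp", "I can see two pending charges."),
    ],
)
print(handoff.summary())
# conv-42: escalated for billing_dispute; 2 messages via whatsapp; opened with: 'My card was charged twice'
```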

"Converso's AI agent has reduced the volume of insurance policy queries that the support team answer by at least 50%, through easy integration of our AI Agent with our sales support team. Together with the upcoming lead gen agent, AI has the potential to revolutionize our business!" - Aaron Valente, Director, Key Health Partnership [1]

Converso also allows for department-specific routing. For example, billing inquiries can go directly to the finance team, while technical questions are sent to product support. Each department has access to the right AI tools and human expertise, which reduces the workload for agents by ensuring they only handle escalations relevant to their skills. Meanwhile, AI takes care of first-line support across all channels [1].
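Department-specific routing can be sketched as a lookup from intent to team queue. The keyword lists, queue names, and the `classify` and `route` functions below are hypothetical placeholders; a real deployment would more likely reuse the intent label the AI agent already produces than rely on substring matching.

```python
# Hypothetical keyword lists; a real system would reuse the AI agent's intent label.
INTENT_KEYWORDS = {
    "billing": ("invoice", "charge", "refund", "payment"),
    "technical": ("error", "bug", "crash", "login"),
}
# Hypothetical mapping from intent to the human team that owns it.
DEPARTMENT_FOR_INTENT = {
    "billing": "finance",
    "technical": "product_support",
}


def classify(text: str) -> str:
    """Return the first intent whose keywords appear in the message."""
    lowered = text.lower()
    for intent, keywords in INTENT_KEYWORDS.items():
        if any(word in lowered for word in keywords):
            return intent
    return "general"


def route(text: str, escalated: bool) -> str:
    """AI handles first-line support; escalations go to the matching department."""
    if not escalated:
        return "ai_first_line"
    return DEPARTMENT_FOR_INTENT.get(classify(text), "general_support")


print(route("I was charged twice this month", escalated=True))   # finance
print(route("The app shows an error on login", escalated=True))  # product_support
```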

This precise coordination between AI and humans not only improves efficiency but also ensures that every interaction is handled transparently and effectively.

Transparency is crucial - not just to meet regulatory requirements but also to build trust. Customers need to know upfront when they’re interacting with AI. A simple disclosure like, "You're chatting with an AI agent powered by Converso - type 'human' for live support", sets clear expectations and provides an easy way to escalate the conversation.

Studies show that clear labeling can boost customer satisfaction by 20–30% because it minimizes confusion and frustration [2]. Features like visible labels, straightforward escalation options, and concise summaries of AI decisions all contribute to a more ethical and transparent process. The goal is to make it as easy as possible for customers to reach a human when needed - no one should feel stuck in an automated loop when personal attention is required.

The first step toward ethical data handling is data minimization - only collect what’s absolutely necessary to address the issue at hand. For example, if a customer's birthdate or full address isn’t required to resolve their query, don’t ask for it. Limiting data collection and retention reduces potential risks.
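Data minimization can be enforced in code with a per-intent allowlist, so the AI neither asks for nor stores fields the current task does not need. The intents, field names, and functions below are hypothetical examples.

```python
# Hypothetical per-intent allowlists: the only fields the AI may ask for or store.
REQUIRED_FIELDS = {
    "order_status": {"order_id", "email"},
    "password_reset": {"email"},
    "appointment": {"name", "preferred_time"},
}


def fields_to_request(intent: str, already_have: dict) -> set:
    """Ask only for allowlisted fields that are still missing."""
    return REQUIRED_FIELDS.get(intent, set()) - set(already_have)


def minimize(intent: str, collected: dict) -> dict:
    """Drop anything the current intent does not need before it is stored."""
    allowed = REQUIRED_FIELDS.get(intent, set())
    return {key: value for key, value in collected.items() if key in allowed}


submitted = {"order_id": "A1001", "email": "a@example.com", "birthdate": "1990-01-01"}
print(minimize("order_status", submitted))
# {'order_id': 'A1001', 'email': 'a@example.com'}
```

Unknown intents map to an empty allowlist, so nothing is collected until someone deliberately decides which fields a new task needs.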

Data security is equally important. Encrypt data both in transit (e.g., using TLS) and at rest. Use a standard authorization framework such as OAuth 2.0 to secure data exchanges between AI systems and other platforms. Use role-based access controls to ensure that only authorized personnel can access sensitive information.
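Encryption in transit and OAuth 2.0 are normally handled by your web framework and identity provider, but the role-based access check itself is simple enough to sketch directly. The roles and permissions below are hypothetical; the key design choice is deny-by-default, so an unknown role gets no access.

```python
from enum import Enum


class Permission(Enum):
    READ_TRANSCRIPT = "read_transcript"
    READ_PAYMENT_DATA = "read_payment_data"
    EXPORT_DATA = "export_data"


# Hypothetical roles; anything not listed here gets no access.
ROLE_PERMISSIONS = {
    "ai_agent": {Permission.READ_TRANSCRIPT},
    "support_agent": {Permission.READ_TRANSCRIPT},
    "billing_specialist": {Permission.READ_TRANSCRIPT, Permission.READ_PAYMENT_DATA},
    "compliance_officer": {Permission.READ_TRANSCRIPT, Permission.EXPORT_DATA},
}


def is_allowed(role: str, permission: Permission) -> bool:
    return permission in ROLE_PERMISSIONS.get(role, set())


def require(role: str, permission: Permission) -> None:
    """Raise before any sensitive data is touched if the role lacks permission."""
    if not is_allowed(role, permission):
        raise PermissionError(f"role {role!r} lacks {permission.value}")


require("billing_specialist", Permission.READ_PAYMENT_DATA)  # passes silently
print(is_allowed("ai_agent", Permission.READ_PAYMENT_DATA))  # False
```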

Converso takes additional precautions by segregating customer data through department-specific knowledge bases, reducing unnecessary exposure. All customer interactions are archived in tamper-proof repositories, ensuring compliance with regulations like GDPR [1]. Furthermore, access to specific customer conversations can be restricted to designated staff, maintaining confidentiality within your organization [1].

Once strong data practices are in place, the next challenge is addressing bias in AI systems.

Secure data management lays the groundwork for tackling bias, which is critical for ensuring fairness. Bias in AI systems can manifest in various ways, such as unequal wait times, inconsistent response quality, or misinterpreted sentiment across demographic groups [6]. Conducting regular fairness audits is a proactive way to identify and address these issues. For instance, analyzing conversation logs by customer demographics and tracking metrics like first-contact resolution rates or customer satisfaction can help uncover disparities.
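A basic fairness audit compares the same service metrics across groups. The sketch below assumes conversation records are already exported as dictionaries with hypothetical keys (`resolved_first_contact`, `csat`, `wait_s`); the grouping attribute can be channel, language, or any demographic field you are permitted to analyze, and the gap thresholds are placeholders to tune.

```python
from __future__ import annotations

from collections import defaultdict
from statistics import mean


def group_metrics(records: list[dict], group_key: str) -> dict[str, dict[str, float]]:
    """Per-group first-contact resolution rate, mean CSAT, and mean wait time."""
    groups: dict[str, list[dict]] = defaultdict(list)
    for record in records:
        groups[record[group_key]].append(record)
    return {
        group: {
            "fcr_rate": mean(1.0 if r["resolved_first_contact"] else 0.0 for r in rows),
            "csat": mean(r["csat"] for r in rows),
            "wait_s": mean(r["wait_s"] for r in rows),
        }
        for group, rows in groups.items()
    }


def disparity_flags(metrics, metric: str, max_gap: float, higher_is_better: bool = True) -> list[str]:
    """Groups whose metric is more than max_gap away from the best group."""
    values = {group: m[metric] for group, m in metrics.items()}
    best = max(values.values()) if higher_is_better else min(values.values())
    return sorted(group for group, v in values.items() if abs(best - v) > max_gap)


records = [
    {"channel": "webchat", "resolved_first_contact": True, "csat": 4.5, "wait_s": 30},
    {"channel": "webchat", "resolved_first_contact": True, "csat": 4.0, "wait_s": 40},
    {"channel": "sms", "resolved_first_contact": False, "csat": 3.0, "wait_s": 120},
    {"channel": "sms", "resolved_first_contact": True, "csat": 4.0, "wait_s": 100},
]
metrics = group_metrics(records, "channel")
print(disparity_flags(metrics, "fcr_rate", max_gap=0.10))                      # ['sms']
print(disparity_flags(metrics, "wait_s", max_gap=30, higher_is_better=False))  # ['sms']
```

Wait time is the one metric where lower is better, which is why `disparity_flags` takes a direction flag rather than always comparing against the maximum.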

Tools such as Fairlearn or Google's What-If Tool can help detect bias by comparing model metrics across groups and probing how outputs change when inputs vary. For example, if analysis reveals that non-native English speakers experience more escalations or older customers report lower satisfaction, the model may need retraining with more diverse data [7]. Engaging diverse teams in algorithm development and establishing a clear process for handling bias incidents - such as documenting issues, adjusting model prompts, retraining, and re-testing - can further reduce bias. Additionally, routing high-impact decisions, such as fraud detection or account closures, through human review ensures that these critical cases are handled fairly and thoughtfully [10].
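The bias-incident process described above can be made auditable with a record that only accepts steps in order. This sketch assumes every incident goes through all four listed steps in sequence, which real teams may relax; `BiasIncident`, `STEPS`, and the step names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# The remediation steps named in the text, in the order this sketch enforces.
STEPS = ("documented", "prompt_adjusted", "retrained", "retested")


@dataclass
class BiasIncident:
    """Hypothetical incident record that refuses out-of-order steps."""
    incident_id: str
    description: str
    completed: list[str] = field(default_factory=list)
    notes: dict[str, str] = field(default_factory=dict)

    def next_step(self) -> str | None:
        return STEPS[len(self.completed)] if len(self.completed) < len(STEPS) else None

    def advance(self, step: str, note: str) -> None:
        expected = self.next_step()
        if step != expected:
            raise ValueError(f"expected step {expected!r}, got {step!r}")
        self.completed.append(step)
        self.notes[step] = note  # keeps an audit trail of what was done

    @property
    def closed(self) -> bool:
        return tuple(self.completed) == STEPS


incident = BiasIncident("BI-001", "More escalations for non-native English speakers")
incident.advance("documented", "Gap found in monthly fairness audit")
print(incident.next_step())  # prompt_adjusted
```

Refusing to close an incident before re-testing keeps a fix from being declared done on the strength of a prompt change alone.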

These actions work hand-in-hand with strong data practices to keep AI decision-making fair and balanced.

Transparency is the cornerstone of privacy and consent in AI-driven customer service. Customers should always know when they’re interacting with AI and understand how their data is being used. According to a 2023 Zendesk survey, while 73% of customers expect companies to use their data to personalize interactions, 60% remain concerned about how their information is handled [9]. Simple, clear notices - like stating that "this AI chat uses your email to assist you" - can help set expectations and build trust.

Handling personally identifiable information (PII) such as names, emails, phone numbers, or payment details requires extra diligence. Mask or tokenize sensitive data in logs and training datasets, and enforce strict retention schedules to limit unnecessary exposure [10]. Converso supports these efforts by allowing granular control over which team members can access specific customer conversations, minimizing risks [1].
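Masking and tokenizing PII before text reaches logs or training data might look like the sketch below. The regular expressions are deliberately simple and broad (they may over-redact, which is the safer failure mode) and will miss names, addresses, and many international formats. The HMAC key is a hypothetical placeholder that would come from a secrets manager in practice.

```python
import hashlib
import hmac
import re

# Deliberately simple, broad patterns for illustration; not production-grade PII detection.
CARD_RE = re.compile(r"\b(?:\d[ -]?){12,15}\d\b")   # 13-16 digit card numbers
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")      # loose phone pattern
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def tokenize(value: str, key: bytes) -> str:
    """Keyed, deterministic token: the same email always maps to the same token,
    so logs stay joinable, but the raw address is never written out."""
    digest = hmac.new(key, value.lower().encode(), hashlib.sha256).hexdigest()
    return f"<email:{digest[:12]}>"


def redact(text: str, key: bytes) -> str:
    # Order matters: cards before phones (the phone pattern would also match
    # card numbers), and emails last so their tokens are never re-scanned.
    text = CARD_RE.sub("[CARD]", text)
    text = PHONE_RE.sub("[PHONE]", text)
    return EMAIL_RE.sub(lambda m: tokenize(m.group(), key), text)


KEY = b"placeholder-key"  # hypothetical; load from a secrets manager in practice
print(redact("Email jane@example.com or call +1 415 555 0100, card 4111 1111 1111 1111", KEY))
```

Masking (replacing with a fixed label) suits data you never need again, such as card numbers; tokenizing suits identifiers you still need to link across conversations without exposing them.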

Consent management is another critical piece of the puzzle. Ensure that opt-in and opt-out options are clear and accessible. For compliance with regulations like CCPA and GDPR, provide mechanisms for users - especially those in California or the EU - to access, correct, or delete their data. Conducting Data Protection Impact Assessments (DPIAs) for new AI features, particularly those involving profiling or large-scale monitoring, further strengthens privacy safeguards [8][10].
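Consent tracking and data subject requests can be sketched as a small store that records opt-in and opt-out decisions per purpose and supports access, correction, and deletion. `CustomerDataStore`, `ConsentRecord`, and the purpose names are hypothetical; the store lives in memory only, and treating a missing record as no consent is an assumption (the stricter, opt-in default).

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class ConsentRecord:
    purpose: str        # e.g. "ai_personalization"
    granted: bool
    recorded_at: str    # when the customer made this choice


@dataclass
class CustomerDataStore:
    """Hypothetical in-memory store for consent plus access/correct/delete requests."""
    profiles: dict[str, dict[str, str]] = field(default_factory=dict)
    consents: dict[str, dict[str, ConsentRecord]] = field(default_factory=dict)

    def set_consent(self, customer_id: str, purpose: str, granted: bool) -> None:
        """Record an opt-in (granted=True) or opt-out (granted=False)."""
        record = ConsentRecord(purpose, granted, datetime.now(timezone.utc).isoformat())
        self.consents.setdefault(customer_id, {})[purpose] = record

    def has_consent(self, customer_id: str, purpose: str) -> bool:
        """No record means no consent."""
        record = self.consents.get(customer_id, {}).get(purpose)
        return record is not None and record.granted

    def access(self, customer_id: str) -> dict:
        """Right of access: return everything held about the customer."""
        return {
            "profile": dict(self.profiles.get(customer_id, {})),
            "consents": {p: r.granted for p, r in self.consents.get(customer_id, {}).items()},
        }

    def correct(self, customer_id: str, field_name: str, value: str) -> None:
        self.profiles.setdefault(customer_id, {})[field_name] = value

    def delete(self, customer_id: str) -> None:
        """Right to deletion. Real systems may need to keep a minimal record
        proving the request was honored."""
        self.profiles.pop(customer_id, None)
        self.consents.pop(customer_id, None)


store = CustomerDataStore()
store.correct("c1", "email", "new@example.com")
store.set_consent("c1", "ai_personalization", True)
print(store.access("c1"))
# {'profile': {'email': 'new@example.com'}, 'consents': {'ai_personalization': True}}
```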

Creating a solid governance framework starts with assigning clear responsibilities. Set up a cross-functional AI ethics committee that includes members from customer service, legal, technology, and executive leadership. This team is responsible for overseeing ethical guidelines, reviewing AI projects for potential risks, and addressing concerns as they arise [2]. While business leaders shape the overall adoption strategy, the committee focuses on specific guidelines like data collection limits, bias detection protocols, transparency standards, and triggers for escalation when AI confidence falls below acceptable levels [2].

To put these principles into action, develop clear decision trees that guide employees, prevent misuse of data, and ensure customer consent is explicit [2][3]. Engage a broad range of stakeholders, including frontline customer service staff and even customers, to ensure policies address practical needs. Tools like Converso’s department-specific groups and secure archival features make oversight more tailored and audits more straightforward [1].

Once governance is in place, systematic monitoring becomes the next essential step.

Track key performance indicators like wait times, sentiment, resolution rates, and escalation frequency to detect potential issues early [2][6]. Use tools such as the OpenAI Moderation API to screen AI interactions for harmful content like hate speech or harassment; detecting sensitive personal data requires separate PII-detection tooling. The NIST AI Risk Management Framework (AI RMF 1.0), released in January 2023, offers a structured method for documenting AI behavior, evaluating risks, and promoting transparency, and it can be applied to customer-facing systems to help minimize bias [2].
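KPI monitoring can start as a daily threshold check like the one below. `DailyKpis`, `kpi_alerts`, and every threshold value are hypothetical placeholders; in practice you would set thresholds from your own historical baseline and feed the inputs from your helpdesk analytics.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class DailyKpis:
    conversations: int
    escalations: int
    resolved: int
    avg_wait_s: float
    negative_sentiment: int  # conversations a sentiment model marked negative


# Hypothetical alert thresholds; tune them against your own baseline.
THRESHOLDS = {
    "escalation_rate": 0.30,   # alert above 30% of conversations escalated
    "resolution_rate": 0.70,   # alert below 70% resolved
    "avg_wait_s": 60.0,        # alert above one minute average wait
    "negative_rate": 0.20,     # alert above 20% negative sentiment
}


def kpi_alerts(day: DailyKpis) -> list[str]:
    """Return human-readable alerts for any KPI outside its threshold."""
    n = max(day.conversations, 1)  # avoid division by zero on quiet days
    escalation_rate = day.escalations / n
    resolution_rate = day.resolved / n
    negative_rate = day.negative_sentiment / n

    alerts = []
    if escalation_rate > THRESHOLDS["escalation_rate"]:
        alerts.append(f"escalation rate {escalation_rate:.0%} above limit")
    if resolution_rate < THRESHOLDS["resolution_rate"]:
        alerts.append(f"resolution rate {resolution_rate:.0%} below limit")
    if day.avg_wait_s > THRESHOLDS["avg_wait_s"]:
        alerts.append(f"average wait {day.avg_wait_s:.0f}s above limit")
    if negative_rate > THRESHOLDS["negative_rate"]:
        alerts.append(f"negative sentiment {negative_rate:.0%} above limit")
    return alerts


print(kpi_alerts(DailyKpis(200, 80, 150, 45.0, 20)))
# ['escalation rate 40% above limit']
```

Running the same check per channel or per customer group, rather than only on totals, connects this monitoring back to the fairness audits described earlier.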

Well-defined escalation processes are a natural extension of strong governance. Establish clear protocols for situations like low AI confidence scores, detected bias, regulatory concerns, or emotionally sensitive interactions [2][5]. Converso’s seamless handoff feature ensures that when human agents take over, they have access to the full conversation history and context. This maintains continuity and allows for expert review of flagged interactions [1]. Equip your human agents with training on AI collaboration so they know when and how to step in effectively.

Continuous monitoring provides the insights needed to refine AI operations. Regularly review conversation transcripts to assess AI performance, ensuring the right tone is used in challenging customer interactions. Conduct periodic audits to align with updated standards and incorporate feedback loops from human agents who can identify recurring issues or areas where AI responses fall short [5][6].

Converso’s shared team inbox centralizes all communication channels, simplifying transcript reviews and revealing opportunities for improvement [1]. Use these insights to fine-tune AI prompts, retrain models with more diverse data, and update your knowledge base. Additionally, Converso’s upcoming AI-driven trend analysis feature will help identify emerging issues early, allowing you to address them before they impact a larger audience [1]. While governance and monitoring lay the groundwork for ethical AI, continuous improvement ensures systems remain accurate, fair, and aligned with ethical standards.

Ethical AI in customer service rests on the five core principles covered above (fairness, transparency, privacy, accountability, and safety), which in practice translate into bias mitigation, data protection, governance, human oversight, and user consent and control. Customers should always be informed when interacting with AI, and they must have a clear path to reach a human, especially when dealing with matters involving finances, safety, or complex concerns. Regular bias audits, strict data minimization practices, and well-documented escalation processes are crucial for protecting both customers and businesses. The White House's non-binding Blueprint for an AI Bill of Rights sets out similar expectations for U.S. companies, emphasizing safe and effective systems, protection from algorithmic discrimination, data privacy, notice and explanation, and access to human alternatives [9]. Together, these guidelines create a framework where automation complements human expertise.

Striking the right balance between AI and human involvement is essential for long-term success. AI excels at handling routine tasks like password resets, order tracking, and FAQs. Meanwhile, human agents step in for more complex and high-stakes issues, such as billing disputes or account closures. This division of responsibilities not only boosts efficiency but also strengthens customer trust. Research indicates that 65% of business leaders believe AI is becoming more human-like, making transparency and an easy handoff to human agents more important than ever [9]. Without a smooth transition to human support, customer trust can erode.

Converso applies these ethical principles by blending advanced technology with human oversight to enhance customer service. Features like multiple workspaces and inboxes keep teams and customer segments organized, ensuring AI agents only access the data they need. A seamless handoff feature ensures that when a human takes over, the full conversation history is preserved, enabling context-rich and uninterrupted support. The shared team inbox centralizes communication channels such as webchat, WhatsApp, and SMS, making it easier to review transcripts, oversee interactions, and conduct bias checks [1].

Privacy is another priority. Converso offers GDPR-compliant, tamper-proof archiving and strict confidentiality settings for messages [1]. These features can also support compliance with U.S. state privacy laws such as the CCPA. By automating routine tasks while keeping humans involved where it counts, Converso helps businesses deliver fast, accurate service while adhering to the ethical standards customers expect.

To reduce bias in AI-powered customer service, businesses should prioritize a few essential practices. First, use diverse and representative training data so the AI can learn from a broad spectrum of situations and customer demographics. Regularly audit and evaluate AI responses to spot any unintended patterns or biases. Additionally, employ bias-mitigation algorithms and keep a close eye on performance to make necessary adjustments over time.

These measures can help businesses build fairer, more inclusive AI systems that improve customer interactions while upholding ethical principles.

Ethical AI in customer service is shaped by several key regulations that emphasize transparency, fairness, and accountability. For instance, the California Consumer Privacy Act (CCPA) focuses on protecting consumer data privacy in the U.S., while the General Data Protection Regulation (GDPR) provides a robust framework for data protection across the European Union. In addition, new federal guidelines in the United States are emerging to set standards aimed at ensuring fairness and reducing bias in AI systems.

For businesses using AI to interact with customers, understanding and adhering to these regulations is critical. Not only do they help protect customer rights, but they also play a vital role in fostering trust and upholding ethical business practices.

Blending AI-driven tools with human expertise transforms customer service: AI takes over repetitive tasks and answers routine questions quickly, freeing human agents to focus on nuanced or sensitive matters. When a personal touch is required, human agents step in with the full context of the conversation. Together, they create a seamless and tailored experience for customers.

This partnership doesn’t just improve efficiency - it strengthens trust. Customers appreciate the speed and accuracy of AI, combined with the empathy and understanding that only humans can provide. The outcome? Happier customers and deeper loyalty.